How reproducible are real-world studies using clinical practice data?

That is the question posed by Wang, Sreedhara and Schneeweiss (2022) in Nature Communications. The authors reproduce the findings of n=250 real-world data studies using four data sources: (i) Optum Clinformatics claims data, (ii) IBM MarketScan Research Database claims data, (iii) Medicare fee-for-service claims data, and (iv) CPRD electronic health records. Key findings from the paper are below:

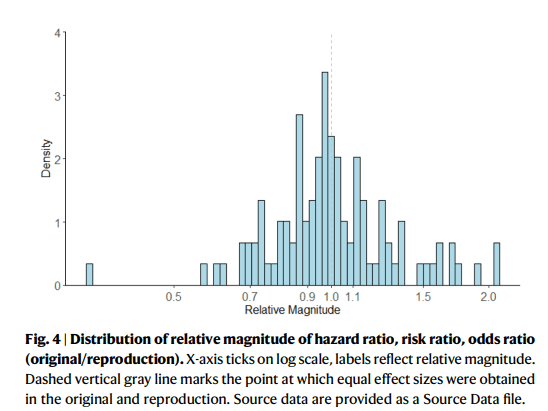

Original and reproduction effect sizes were positively correlated (Pearson's correlation = 0.85), a strong relationship with some room for improvement. The median and interquartile range for the relative magnitude of effect (e.g., hazard ratio (original) / hazard ratio (reproduction)) was 1.0 [0.9, 1.1], with a range of [0.3, 2.1]. While the majority of results are closely reproduced, a subset are not. These discrepancies can be explained by incomplete reporting and updated data. Greater methodological transparency aligned with new guidance may further improve reproducibility and validity assessment, thus facilitating evidence-based decision-making.

This paper won the 2023 Award for Excellence in Health Economics and Outcomes Research Methodology. You can read the full paper here: https://www.nature.com/articles/s41467-022-32310-3