'But is it fair?': AI systems show promise but questions remain

A “for sale” sign outside of a home in Atlanta in February. More companies are exploring ways to use AI in mortgage origination decisions.

Bloomberg News

A potentially scary or intriguing thought, depending on your worldview: whether you are approved for a mortgage could hinge on the type of yogurt you purchase.

Buying the more daring and worldly Siggi’s, a fancy imported Icelandic brand, could mean you achieve the American Dream, while enjoying the more pedestrian choice of Yoplait’s whipped strawberry flavor could lead to another year of living in your parents’ basement.

Consumer habits and preferences can be used by machine learning or artificial intelligence-powered systems to build a financial profile of an applicant. In this evolving field, the data used to determine a person’s creditworthiness could include anything from subscriptions to certain streaming services to applying for a mortgage in an area with a higher rate of defaults to even a penchant for purchasing luxury products — the Siggi’s brand of yogurt, for instance.

Unlike the recent craze with AI-powered bots, such as ChatGPT, machine learning technology involved in the lending process has been around for at least half a decade. But greater awareness of this technology in the cultural zeitgeist and fresh scrutiny from regulators have many weighing both its potential benefits and its possible unintended, and negative, consequences.

AI-driven decision-making is advertised as a more holistic way of assessing a borrower than solely relying on traditional methods, such as credit reports, which can be disadvantageous for some socio-economic groups and result in more denials of loan applications or in higher interest rates being charged.

Companies in the financial services sector, including Churchill Mortgage, Planet Home Lending, Discover and Citibank, have started experimenting with using this technology during the underwriting process.

The AI tools could offer a fairer risk assessment of a borrower, according to Sean Kamar, vice president of data science at Zest AI, a technology company that builds software for lending.

“A more accurate risk score allows lenders to be more confident about the decision that they’re making,” he said. “This is also a solution that mitigates any kind of biases that are present.”

But despite the promise of more equitable outcomes, additional transparency about how these tools learn and make choices may be needed before broad adoption is seen across the mortgage industry. This is partly due to ongoing concerns that such systems could be prone to discriminatory lending practices.

AI-powered systems have been under the watchful eye of agencies responsible for enforcing consumer protection laws, such as the Consumer Financial Protection Bureau.

“Companies must take responsibility for the use of these tools,” Rohit Chopra, the CFPB’s director, warned during a recent interagency press briefing about automated systems. “Unchecked AI poses threats to fairness and our civil rights,” he added.

Stakeholders in the AI industry expect standards to be rolled out by regulators in the near future, which could require companies to disclose their secret sauce — what variables they use to make decisions.

Companies involved in building this type of technology welcome guardrails, seeing them as a necessary burden that can result in greater clarity and more future customers.

The world of automated systems

In the analog world, a handful of data points provided by one of the credit reporting agencies, such as Equifax, Experian or TransUnion, help to determine whether a borrower qualifies for a mortgage.

A summary report is issued by these agencies that outlines a borrower’s credit history, the number of credit accounts they’ve had, payment history and bankruptcies. From this information, a credit score is calculated and used in the lending decision.

Credit scores are “a two-edged sword,” explained David Dworkin, CEO of the National Housing Conference.

“On the one hand, the score is highly predictive of the likelihood of [default],” he said. “And, on the other hand, the scoring algorithm clearly skews in favor of a white traditional, upper middle class borrower.”

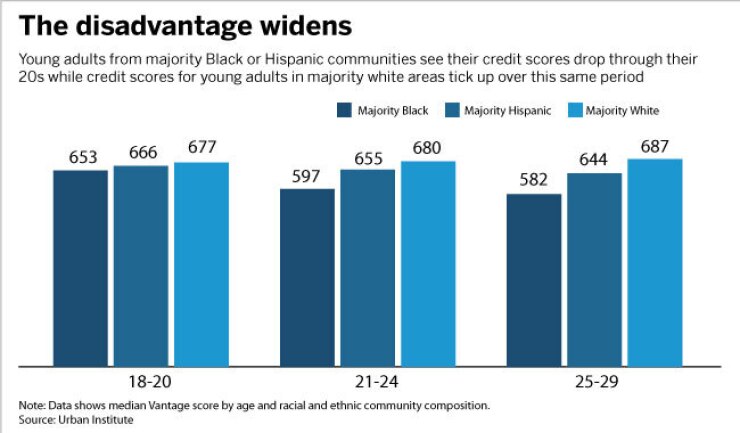

This pattern begins as early as young adulthood for borrowers. A report published by the Urban Institute in 2022 found that young minority groups experience “deteriorating credit scores” compared to white borrowers. From 2010 to 2021, almost 33% of Black 18-to-29-year-olds and about 26% of Hispanic people in that age group saw their credit score drop, compared with 21% of young adults in majority-white communities.

That points to “decades of systemic racism” when it comes to traditional credit scoring, the nonprofit’s analysis argues.

The selling point of underwriting systems powered by machine learning is that they rely on a much broader swath of data and can analyze it in a more nuanced, nonlinear way, which can potentially minimize bias, industry stakeholders said.

“The old way of underwriting loans is relying on FICO calculations,” said Subodha Kumar, data science professor at Temple University in Philadelphia. “But the newer technologies can look at [e-commerce and purchase data], such as the yogurt you buy to help in predicting whether you’ll pay your loan or not. These algorithms can give us the optimal value of each individual so you don’t put people in a bucket anymore and the decision becomes more personalized, which is supposedly much better.”

An example of how a consumer’s purchase decisions may be used by automated systems to determine creditworthiness is laid out in a research paper published in 2021 by the University of Pennsylvania, which found a correlation between products consumers buy at a grocery store and the financial habits that shape credit behaviors.

The paper concluded that applicants who buy things such as fresh yogurt or imported snacks fall into the category of low-risk applicants. In contrast, those who add canned food, deli meats and sausages to their carts land in the more-likely-to-default category because their purchases are “less time-intensive…to transform into consumption.”

Though technology companies interviewed denied using such data points, most do rely on a more creative approach to determine whether a borrower qualifies for a loan. According to Kamar, Zest AI’s underwriting system can distinguish between a “safe borrower” who has high utilization and a consumer whose spending habits pose risk.

“[If you have a high utilization, but you are consistently paying off your debt] you’re probably a much safer borrower than somebody who has very high utilization and is constantly opening up new lines of credit,” Kamar said. “Those are two very different borrowers, but that difference is not seen by more simpler, linear models.”
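The distinction Kamar describes can be illustrated with a toy comparison. The sketch below is purely hypothetical: the `Borrower` fields, the weights and both scoring functions are invented for illustration and are not Zest AI's model or any lender's actual method. It only shows how a score that looks at utilization alone treats two borrowers identically, while a score that lets utilization interact with repayment behavior and new credit lines separates them.

```python
from dataclasses import dataclass


@dataclass
class Borrower:
    utilization: float      # share of available credit in use, 0.0 to 1.0
    pays_down_debt: bool    # consistently pays off what is owed
    new_accounts_12m: int   # credit lines opened in the past 12 months


def utilization_only_risk(b: Borrower) -> float:
    """Hypothetical simple score: risk rises with utilization and nothing else."""
    return 0.8 * b.utilization


def interaction_risk(b: Borrower) -> float:
    """Hypothetical nonlinear score: the meaning of high utilization
    depends on how the borrower behaves (illustrative weights only)."""
    risk = 0.8 * b.utilization
    if b.pays_down_debt:
        # High utilization matters far less when the debt is reliably repaid.
        risk *= 0.25
    # Opening new lines while already highly utilized compounds the risk.
    risk += 0.1 * b.new_accounts_12m * b.utilization
    return risk


# Two invented borrowers with the same high utilization.
steady = Borrower(utilization=0.9, pays_down_debt=True, new_accounts_12m=0)
stretched = Borrower(utilization=0.9, pays_down_debt=False, new_accounts_12m=4)

for name, b in (("steady", steady), ("stretched", stretched)):
    print(name, utilization_only_risk(b), interaction_risk(b))
```

Under these made-up weights, the utilization-only score cannot tell the two borrowers apart, while the interaction score rates the second as markedly riskier.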

Meanwhile, TurnKey Lender, a technology company, also has an automated underwriting system that pulls standard data, such as personal information, property information and employment, but can also analyze more “out-of-the-box” data to determine a borrower’s creditworthiness. Its web platform, which handles origination, underwriting and credit reporting, can draw on algorithms that predict the future behavior of the client, according to Vit Arnautov, chief product officer at TurnKey.

The company’s technology can analyze “spending transactions on an account and what the usual balance is,” added Arnautov. This helps to analyze income and potential liabilities for lending institutions. Additionally, TurnKey’s system can create a heatmap “to see how many delinquencies and how many bad loans are in an area where a borrower lives or is trying to buy a house.”
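The numbers behind an area heatmap of this kind amount to a delinquency rate per geographic unit. The sketch below is a minimal illustration under stated assumptions: the function name, the use of ZIP codes as the area key and the sample records are all invented here and do not describe TurnKey's system or real loan data.

```python
from collections import defaultdict


def delinquency_rate_by_area(loans):
    """Return the share of delinquent loans for each area.

    `loans` is an iterable of (area, is_delinquent) pairs; the area key
    is assumed to be a ZIP code string for this illustration.
    """
    totals = defaultdict(int)
    delinquent = defaultdict(int)
    for area, is_delinquent in loans:
        totals[area] += 1
        if is_delinquent:
            delinquent[area] += 1
    return {area: delinquent[area] / totals[area] for area in totals}


# Invented sample records: (ZIP code, whether the loan is delinquent).
sample_loans = [
    ("30301", False), ("30301", True), ("30301", False), ("30301", False),
    ("30318", True), ("30318", True), ("30318", False), ("30318", False),
]

rates = delinquency_rate_by_area(sample_loans)
for area, rate in sorted(rates.items()):
    print(area, f"{rate:.0%}")
```

A plotting tool would then color each area by its rate; that step is omitted here.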

Bias concerns

Automated systems that pull alternative information could make lending more fair, or, some worry, they could do the exact opposite.

“The challenges that typically happen in systems like these [are] from the data used to train the system,” said Jayendran GS, CEO of Prudent AI, a lending decision platform built for non-qualified mortgage lenders. “The biases typically come from the data.

“If I need to teach you how to make a cup of coffee, I will give you a set of instructions and a recipe, but if I need to teach you how to ride a bicycle, I’m going to let you try it and eventually you’ll learn,” he added. “AI systems tend to work like the bicycle model.”

If the quality of the data is “not good,” the autonomous system could make biased or discriminatory decisions. And the opportunities to ingest potentially biased data are ample, because “your input is the entire internet and there’s a lot of crazy stuff out there,” noted Dworkin.

“I think that when we look at the whole issue, it’s if we do it right, we could really remove bias from the system completely, but we can’t do that unless we have a lot of intentionality behind it,” Dworkin added.

Fear of bias is why government agencies, specifically the CFPB, have been wary of AI-powered platforms making lending decisions without proper guardrails. The government watchdog has expressed skepticism about the use of predictive analytics, algorithms, and machine learning in underwriting, warning that it can also reinforce “historical biases that have excluded too many Americans from opportunities.”

Most recently, the CFPB, along with the Civil Rights Division of the Department of Justice, the Federal Trade Commission and the Equal Employment Opportunity Commission, warned that automated systems may perpetuate discrimination by relying on nonrepresentative datasets. They also criticized the lack of transparency around what variables are actually used to make a lending determination.

Though no guidelines have been set in stone, stakeholders in the AI space expect regulations to be implemented soon. Future rules could require companies to disclose exactly what data is being used and to explain to regulators and customers why they are using those variables, said Kumar, the Temple professor.

“Going forward maybe these systems use 17 variables instead of the 20 they were relying on because they are not sure how these other three are playing a role,” said Kumar. “We may need to have a trade-off in accuracy for fairness and explainability.”

This notion is welcomed by players in the AI space who see regulations as something that could broaden adoption.

“We’ve had very large customers that have gotten very close to a partnership deal [with us] but at the end of the day it got canceled because they didn’t want to stick their neck out because they were concerned with what might happen, not knowing how future rulings may impact this space,” said Zest AI’s Kamar. “We appreciate and invite government regulators to make even stronger positions with regard to how much is absolutely critical for credit underwriting decisioning systems to be fully transparent and fair.”

Some technology companies, such as Prudent AI, have also been cautious about including alternative data because of a lack of regulatory guidance. But once guidelines are developed around AI in lending, GS noted that he would consider expanding the capabilities of Prudent AI’s underwriting system.

“The lending decision is a complicated decision and bank statements are only a part of the decision,” said GS. “We are happy to look at extending our capabilities to solve problems, with other documents as well, but there has to be a level of data quality and we feel that until you have reliable data quality, autonomy is dangerous.”

As developments surrounding AI lending evolve, one point is clear: it is better to live with these systems than without them.

“Automated underwriting, for all of its faults, is almost always going to be better than the manual underwriting of the old days when you had Betty in the back room, with her calculator and whatever biases Betty might have had,” said Dworkin, the head of NHC. “I think at the end of the day, common sense really dictates a lot of how [the future landscape of automated systems will play out] but anybody who thinks they’re going to be successful in defeating the Moore’s Law of technology is fooling themselves.”