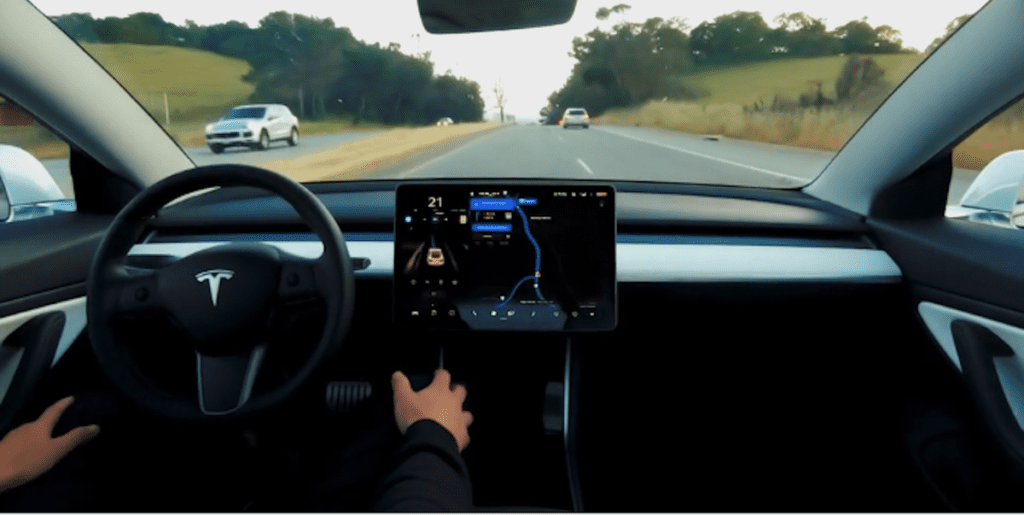

Tesla Recalls 362,758 Cars over Full Self-Driving Crash Risk

Tesla is recalling 362,758 vehicles over issues with its Full Self-Driving software that may allow vehicles to exceed speed limits or drive through intersections in an unlawful or unpredictable manner, according to filings with the National Highway Traffic Safety Administration (NHTSA). The recall spans the full lineup, covering certain Model 3, Model X, Model Y and Model S units manufactured between 2016 and 2023. Tesla said it will issue a free over-the-air (OTA) software update for the affected vehicles and will send notification letters to owners by April 15, 2023.

Tesla is recalling hundreds of thousands of vehicles over safety issues regarding the company’s Full Self-Driving (FSD Beta) automated-driving software. The recall affects a total of 362,758 vehicles including certain Model 3, Model X, Model Y, and Model S EVs manufactured between 2016 and 2023.

Filings with NHTSA show that vehicles using FSD Beta may behave unsafely, particularly around intersections. According to NHTSA documents, a vehicle may travel straight through an intersection while in a turn-only lane, enter a stop-sign-controlled intersection without coming to a complete stop, or proceed into an intersection during a steady yellow signal without due caution. The software may also fail to respond to changes in posted speed limits and fail to slow the vehicle when it enters a lower-speed zone.

Tesla will release an over-the-air (OTA) software update to fix the problem free of charge. Owner notification letters are expected to be mailed by April 15, 2023. Owners may contact Tesla customer service at 877-798-3752. Tesla's number for this recall is SB-23-00-001.

NHTSA's Office of Defects Investigation had previously opened a preliminary evaluation into the performance of Tesla's Autopilot. According to NHTSA, the investigation was prompted by an accumulation of crashes in which Tesla vehicles operating with Autopilot engaged struck stationary first responder vehicles, either in the road or at the roadside, that were attending to earlier collision scenes. The preliminary evaluation was later upgraded to an Engineering Analysis (EA) to extend the existing crash analysis, evaluate additional data sets, and perform vehicle evaluations. The EA also set out to explore the degree to which Autopilot and associated Tesla systems may exacerbate human factors or behavioral safety risks by undermining the effectiveness of the driver's supervision.